Whatever happened to the multi-armed bandit?

I'm not sure why, but some time this week while getting miserably sick with the latest cold the kid brought home, I thought about multi-armed bandit models. More precisely, I realized that I haven't heard anything about them in many years.

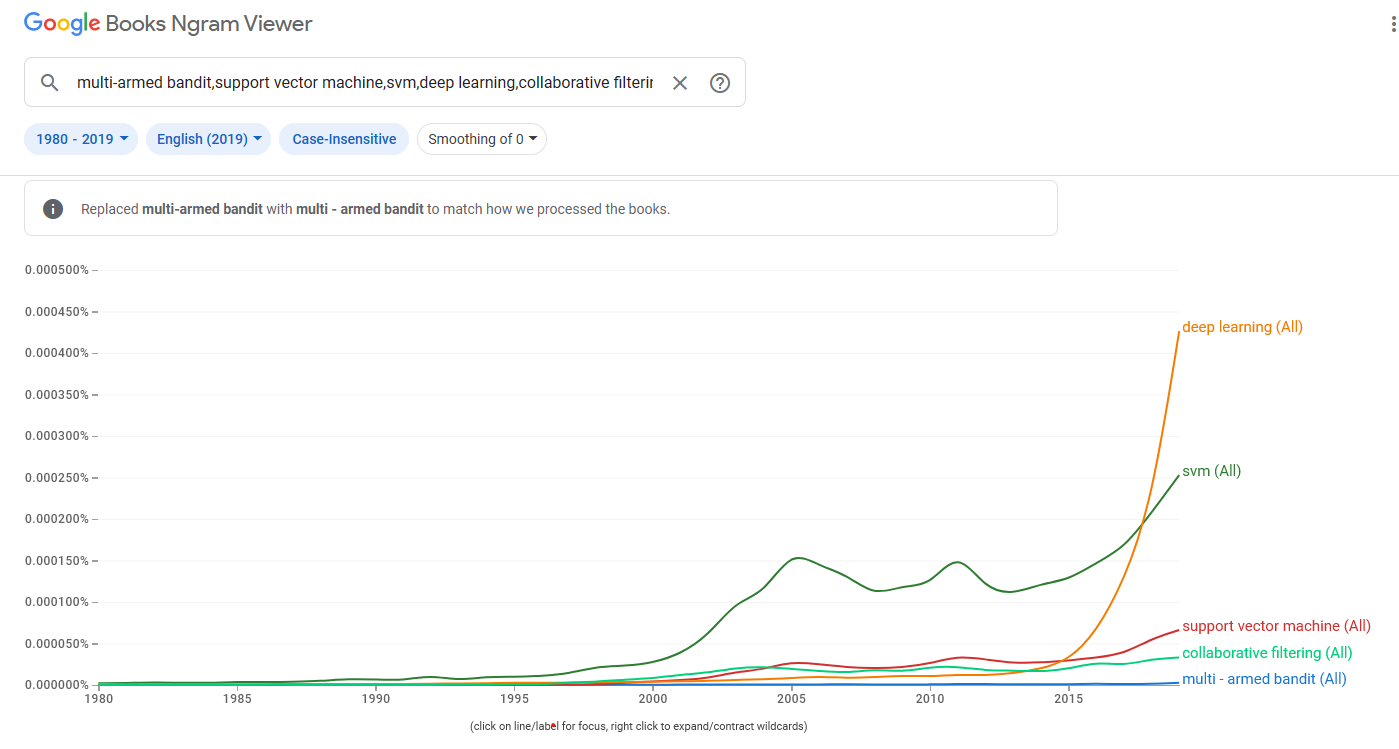

For context, there was a very brief period (maybe around 2010-2012?), back when data science was gaining traction as "a thing", when there seemed to be a mini fad around exploring the use of such models. After all, if A/B tests were the poster child method for DS at the time, then multi-armed bandit models seemed to promise a straight upgrade. Working with many variants, with automatic learning of "the best" variant, seemed like a natural improvement in experimental methodology. Surely, most simple A/B tests could be implemented as a bandit model, and then we could spend our time doing other, more important things instead of the tedious bookkeeping of setting up and calling dozens of small experiments.

That imagined future has obviously not arrived.

We very often joke that in industry, everything is either a t-test or linear regression. This is because they tend to work well enough across so many problems that we rarely need to look further. Only on rare occasions do we find a situation that fits all the pre-conditions that make using a more advanced model worth the effort. And so, over the weekend, I went on a bit of an internet search quest to see if there were situations where companies say they're actively employing bandit models in production.

If you know of such a system being used in production, please tell me where it's being used and how. I'd love to know more and maybe write about it!

From an initial cursory search, it seems that companies rarely boast about using multi-armed bandit models. Maybe it's because companies consider it part of their "secret sauce"? My suspicion is that multi-armed bandit models are considered too technical to discuss casually in a blog post, while also being too dated to boast about during the current AI hype.

Another annoyance is when you search for academic papers and you find lots of proposed applications instead of... actual applications. Like, I can propose that families use a bandit model for meal planning. Doesn't mean anyone in their right mind will ever do so. I'd then have to chase down those references to see if such a model was actually being used or whether it was just a theoretical exercise. I quickly grew tired of that.

But what applications could I find out there? Here's a sampling of interesting ones I could find out in the wild.

The most prominent applications seemed to be for e-commerce/web applications. I saw a few places that claimed to use a kind of bandit model framework to automatically evaluate huge combinations of different UI treatments for their site – effectively treating the bandit model as a higher level treatment selector. Other mentions leveraged the same ability of "pick from a fixed list of items to see which is best" to select all sorts of parameters: which model/algorithm to use, prices, etc. A 2020 example I found from Stitch Fix shows how such systems get implemented. For a system that is largely "take a list of candidates, pick one, record the outcome reward, repeat", these systems take a surprising amount of text to explain.
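To make that "take a list of candidates, pick one, record the reward, repeat" loop concrete, here's a minimal sketch using epsilon-greedy, which is just one of several common bandit strategies and not necessarily what any of the companies above use. The class name, the `simulate` helper, and the made-up conversion rates are all my own assumptions for illustration.

```python
import random


class EpsilonGreedyBandit:
    """Toy epsilon-greedy bandit over a fixed list of candidates (illustrative only)."""

    def __init__(self, candidates, epsilon=0.1, seed=None):
        self.candidates = list(candidates)
        self.epsilon = epsilon  # fraction of picks spent exploring at random
        self.counts = {c: 0 for c in self.candidates}
        self.means = {c: 0.0 for c in self.candidates}
        self.rng = random.Random(seed)

    def pick(self):
        # Explore: occasionally try a random candidate.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.candidates)
        # Exploit: otherwise use the candidate with the best average reward so far.
        return max(self.candidates, key=lambda c: self.means[c])

    def record(self, candidate, reward):
        # Incremental update of the running mean reward for this candidate.
        self.counts[candidate] += 1
        n = self.counts[candidate]
        self.means[candidate] += (reward - self.means[candidate]) / n


def simulate(true_rates, rounds=5000, epsilon=0.1, seed=0):
    """Run the loop against hypothetical per-candidate conversion rates."""
    bandit = EpsilonGreedyBandit(true_rates.keys(), epsilon, seed)
    world = random.Random(seed + 1)  # stands in for real user behavior
    for _ in range(rounds):
        arm = bandit.pick()
        reward = 1.0 if world.random() < true_rates[arm] else 0.0
        bandit.record(arm, reward)
    return bandit


if __name__ == "__main__":
    # Hypothetical subject lines with made-up conversion rates.
    result = simulate({"subject_a": 0.02, "subject_b": 0.05, "subject_c": 0.20})
    for name in result.candidates:
        print(name, result.counts[name], round(result.means[name], 3))
```

The core really is about twenty lines. My guess is that the text in those engineering posts goes to everything around it: logging, delayed rewards, and serving infrastructure.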

During my browsing, I didn't quite get to see someone say they input the entire HTML hex color palette into a bandit model to pick the optimal colors of links, but I wouldn't be too surprised if someone's tried before. In fact, I couldn't find any examples for the more basic, trivial applications like "find the best email subject line". Those examples all lived in tutorials and it makes me wonder if people are using such models live and just won't say so since it would seem too simplistic and unoriginal.

While poking around e-commerce, I also came across this 2023 post from Instacart about how they implemented "Contextual Bandits", a generalization of the multi-armed bandit that takes actions based on the context (e.g. for user1 living in NYC, use this action, while for user2 in Seattle, use another). Their post was mostly about overcoming the difficulty of collecting data when your potential feature space becomes extremely large.
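As a rough illustration of the "different action per context" idea, here's the most naive version I can think of: keep a separate set of reward estimates for every discrete context. This is not Instacart's approach, just a sketch under my own assumptions. The class name, the city labels, and the banner names are all hypothetical.

```python
import random
from collections import defaultdict


class PerContextBandit:
    """Naive contextual bandit: independent epsilon-greedy stats per discrete context."""

    def __init__(self, actions, epsilon=0.1, seed=None):
        self.actions = list(actions)
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        # stats[context][action] = [pull_count, mean_reward]
        self.stats = defaultdict(lambda: {a: [0, 0.0] for a in self.actions})

    def pick(self, context):
        arms = self.stats[context]
        # Explore at random some of the time, otherwise exploit this context's best action.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.actions)
        return max(self.actions, key=lambda a: arms[a][1])

    def record(self, context, action, reward):
        entry = self.stats[context][action]
        entry[0] += 1
        entry[1] += (reward - entry[1]) / entry[0]  # running mean update


if __name__ == "__main__":
    bandit = PerContextBandit(["banner_a", "banner_b"], epsilon=0.0)
    bandit.record("NYC", "banner_a", 1.0)
    bandit.record("Seattle", "banner_b", 1.0)
    print(bandit.pick("NYC"), bandit.pick("Seattle"))
```

Notice that every context starts learning from scratch. Add more features (city, device, time of day, ...) and the number of context cells multiplies while the data per cell shrinks, which is the data collection problem described above. Practical systems usually replace the lookup table with a model that generalizes across features.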

Trying to find examples OUTSIDE of e-commerce situations has proven challenging. I did stumble upon a recent paper that cited various industries using bandit models. The problem is that they often cite other papers where the authors "propose" the use of a bandit model – which I find a bit weak in terms of being evidence of actual use.

But despite those reservations, they did cite specific examples in healthcare where a bandit model was used to help get better data collection for a clinical trial.

The same paper also lists a bunch of potential use cases for a bandit model, like hyperparameter searching. The ML community, of course, has devoted plenty of research to algorithms for hyperparameter search, and I'm willing to bet that the state of the art algorithms from the ML literature are probably better than a bandit-based exploration approach. This is why it's tricky to gauge actual utility from academic papers.

Still, it's a bit heartening that quietly in the background, these models are still being used in interesting ways. They're not being used in the many naive situations that we had originally envisioned in 2011. Nor are they "better" than the traditional A/B tests that we run. But there's a small niche for them – which is pretty cool.

Other references

Here's some other papers I came upon during my brief search for applications.

Standing offer: If you created something and would like me to review or share it w/ the data community — just email me by replying to the newsletter emails.

Guest posts: If you’re interested in writing a data-related post, whether to show off work or share an experience, or if you need help coming up with a topic, please contact me. You don’t need any special credentials or credibility to do so.

About this newsletter

I’m Randy Au, Quantitative UX researcher, former data analyst, and general-purpose data and tech nerd. Counting Stuff is a weekly newsletter about the less-than-sexy aspects of data science, UX research and tech. With some excursions into other fun topics.

All photos/drawings used are taken/created by Randy unless otherwise credited.

- randyau.com — Curated archive of evergreen posts. Under re-construction thanks to *waves at everything
